A few hours after this morning’s big unveil, Humane opened its doors to a handful of press. Located in a nondescript building in San Francisco’s SoMa neighborhood, the office is home to the startup’s hardware design teams.

An office next door houses Humane’s product engineers, while the electrical engineering team operates out of a third space directly across the street. The company also has an office in New York, though the lion’s share of its 250-person staff is based here in San Francisco.

Today, much of the space is occupied by a series of demo stations (with a strict no-filming policy), where different Ai Pins are laid out in various states of undress, exposing their internal machinations. Before we visit these, however, Humane’s co-founders stand in front of a small group of chairs, flanking a flat screen that lays out the company’s vision.

CEO Bethany Bongiorno gives a brief history of the company, beginning with how she met co-founder and president Imran Chaudhri on her first day at Apple. The company’s entire history ties back to their former employer. It was there they poached CTO Patrick Gates, along with a reported 90 or so other former Applers.

Image Credits: Brian Heater

For his part, Chaudhri frames the company’s story as one of S-curves: 15-year cycles of technology that form the foundation for, and ultimately give way to, what’s next. “The last era has plateaued,” he tells the room, stating that the smartphone is “16 years old.” That, too, appears to be a winking dig at his former employer, whose first iPhone arrived in 2007.

He frames Humane’s first product as “a new way of thinking, a new sense of opportunity.” It’s an effort, he adds, to “productize AI.” The in-person presentation is decidedly more grounded than earlier videos would lead you to believe. It’s true that the statements are still grandiose and sweeping, contextualizing the lapel-worn device as the next step in a computing journey that began with room-size mainframes, but the conversation becomes a touch more pragmatic when the device is laid out before us.

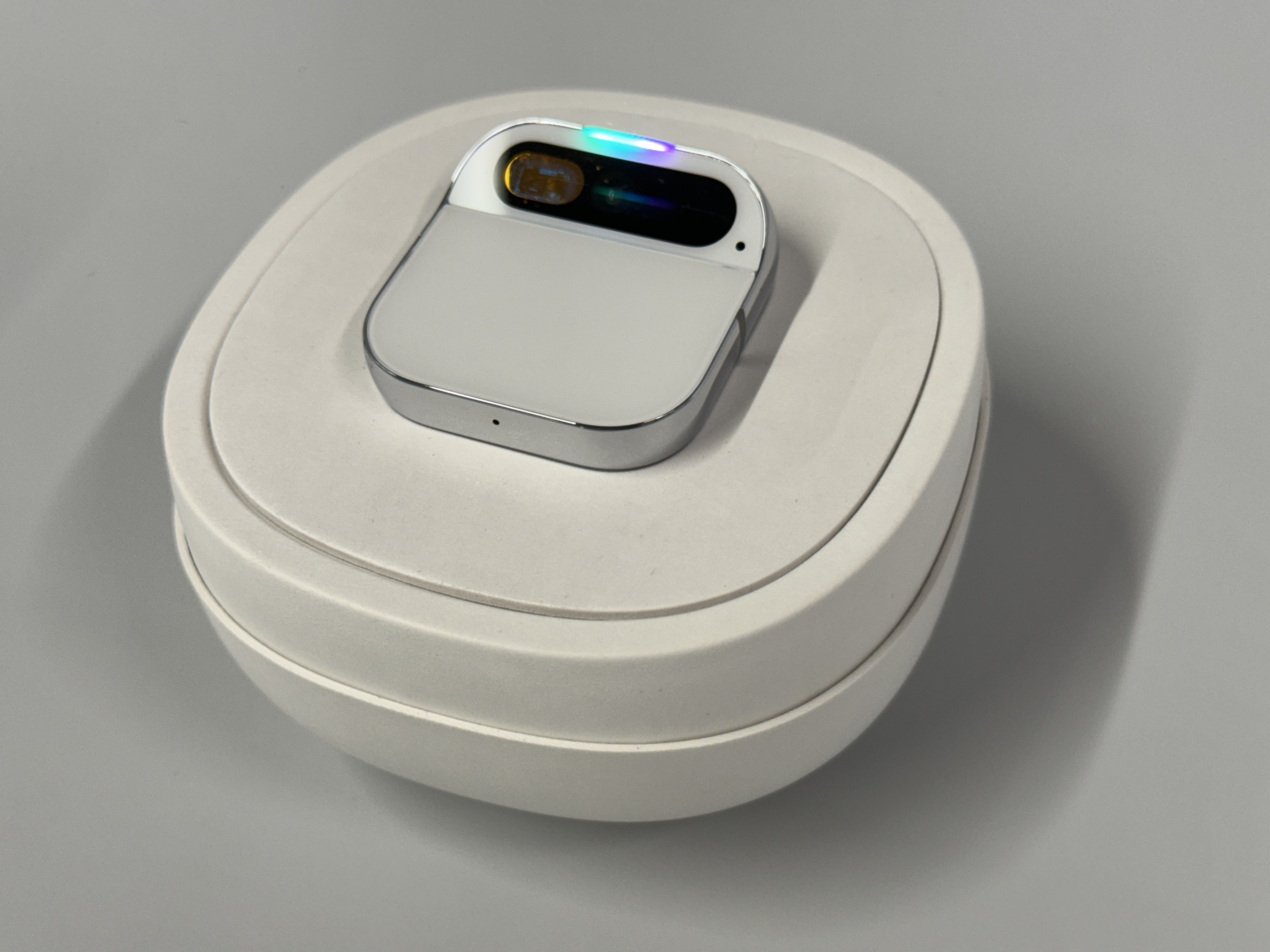

The matchbook-sized device features a Snapdragon processor and 32GB of local storage. The camera is a 12-megapixel sensor designed for a smartphone but integrated into Humane’s own module. There’s an accelerometer, a gyroscope and a time-of-flight depth sensor. Like Apple’s products, it’s designed in California and primarily manufactured in Asia.

Most of the device’s exterior is taken up by a touch panel, behind which sit the majority of the on-board components and a battery that should get four or five hours on a charge. Above this, a kind of camera bar houses the sensors listed above, along with the laser projection system, far and away the most visually arresting aspect of the whole affair. The camera bar is tilted at a downward angle; Humane says it tested the pin on a variety of body types and settled on a design that accommodates users with larger chests.

The company also told me that it tested the laser projection across a spectrum of skin shades to ensure it would be visible. Striking as they are, the projections are regarded as secondary to what is essentially a voice-first product. If, however, you’re in an environment that’s too loud or too quiet for the small, upward-facing speaker that runs along the top of the device, tap the touchpad and the camera goes to work looking for a hand. Once it spots one, it begins projecting.

Image Credits: Brian Heater

Chaudhri demonstrated the feature during a TED Talk back in May. A minute or two in, a staged call comes in from Bongiorno, which the pin projects onto his palm in text form. From here, he can tap his palm to accept or deny the call, with the system identifying the movement and acting accordingly.

The lasers can display far more, however. They show text from messages, which you can scroll through with a pinching gesture on the same hand. They can even display rudimentary previews of the images you shoot, though the green laser doesn’t do the best job of highlighting the subtle intricacies of a photo.

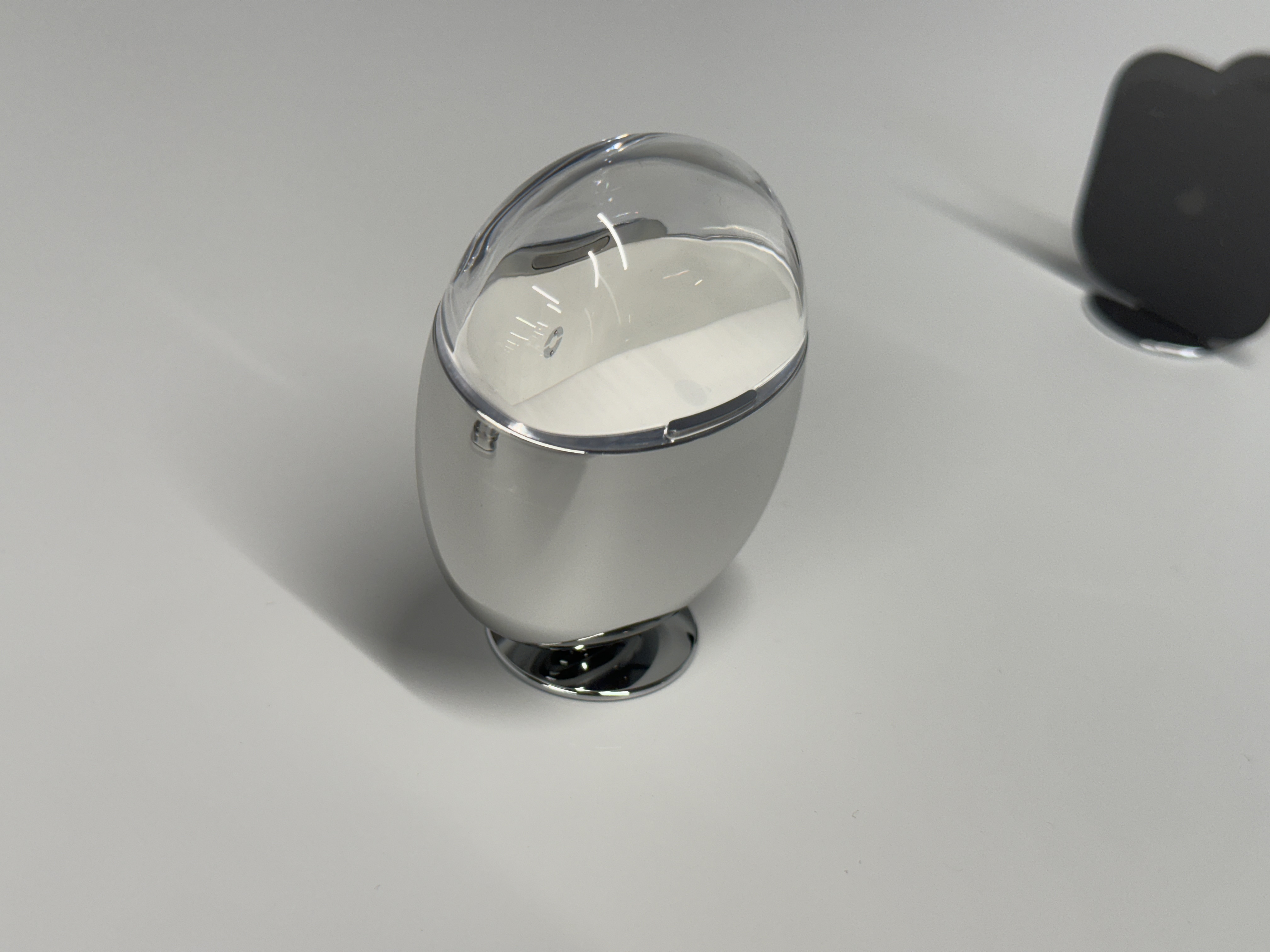

The Ai Pin has a bit of weight to it, though this is offset somewhat by the “battery booster” that ships in the box, bringing the full battery life to around nine hours. The product also comes with an egg-shaped case that adds another full charge. You can slip in the Pin, the booster or both, and they’ll snap into place magnetically. Charging occurs by way of a series of pins on the rear of the device. Also in the box is a charging pad for home use.

Bongiorno confirmed earlier reports of an initial batch of 100,000 units. “I think like with every hardware startup, we want to make sure to plan conservatively for all scenarios,” she says. “For us in the beginning it was really looking at what was the conservative, right and responsible thing to do, in terms of demand and to allow us optionality if our demand goes higher than 100,000.”

She adds that, as of yesterday, more than 110,000 people had signed up for the waiting list, though that number is more an indication of curiosity than of actual purchasing plans, as no deposit was required. The list is also global, whereas the device is only available for preorder in the U.S., where it will go on sale at some point “early next year.” The initial waiting list group will be given “priority access” to purchase the product.

Image Credits: Brian Heater

The heart of the device is AI. It’s among the first hardware products to ride the current wave of excitement around generative AI, but it certainly won’t be the last. Sam Altman’s name has been closely tied to the startup since the day it was announced. I ask how closely Altman and OpenAI were involved in the product’s creation.

“Sam led our Series A in 2020. Imran was very clear that Sam was the target of the Series A and really wanted him involved,” says Bongiorno. “I think there was a lot of mutual respect and excitement about what we all believed was the future, in terms of computer. He’s been an incredible advocate and supporter of us, and picks up the phone every time we need advice and guidance. We’ve been working with the OpenAI team. Our engineering team collaborated and worked closely together.”

According to Chaudhri, GPT is one of many LLMs the system leverages. He also confirmed that GPT-4 will be among the models the system uses. Ultimately, however, the precise AI systems harnessed for any given task are somewhat murky by design. They’re accessed on a case-by-case basis, based on the pin’s determination of the appropriate course of action.

This also applies to web-based queries. The system draws on a variety of search engines and resources like Wikipedia. Some will be official content partners, others not. Thus far, the actual partners are limited: there’s OpenAI and Microsoft, as well as Tidal, which serves as the system’s default music app. An example given during one of our demos was “play music produced by Prince,” rather than the more straightforward “play Prince.”

“Part of our AI is proprietary. We build our own AIs, and then we leverage things like GPT and models from OpenAI,” says Bongiorno. “We can add on LLMs and a lot of services from other people, and our goal is to be the platform for everyone and allow access to a lot of different AI experiences and services, so the business model is structured in a way that allows us to do that. And I think we’ll be thinking about different revenue models that we can also add and different revenue streams on the platform.”

Image Credits: Brian Heater

The goal is to make the experience seamless, both in what happens on the back end with LLMs and web searches and in how the device is updated: the system is designed to continually push updates and add new features in the background. It also draws on additional context, including recently asked questions and location from the on-board GPS.

Photos are a big piece of the puzzle as well. The on-board camera is ultra-wide, with a 120-degree field of view. There’s no autofocus at play; instead, the lens is fixed-focus. Under the lights of the SF offices, at least, the photos looked solid. A good bit of computational photography happens off-device, including accounting for whether the pin is level when taking a shot and orienting the final image accordingly.

Everything still feels like very early days, but it’s clear that a lot of care (and money) went into the product. Demand is perhaps the largest question mark. Has Humane truly found a killer app? For smartwatch makers, health has long been that answer, but health tracking plays a significantly diminished role here.

The product doesn’t come into direct contact with the wearer’s skin, so the health metrics it can collect are limited; at most it might serve as a pedometer, though that feature isn’t currently supported either. The biggest health-related feature at the moment is calorie counting: hold a piece of food up to the camera, and the pin tells you its calories and other nutritional facts, using an unnamed third-party food identification platform.

Image Credits: Brian Heater

Price will certainly be a hurdle for the unproven device. $699 isn’t much by smartphone standards, but it’s a lot to ask for a first-gen product in a new form factor. The additional $24-a-month subscription doesn’t help, either, though Bongiorno adds, “You’re getting a phone number; you’re getting unlimited talk, text and data; you’re getting as many AI queries as you want, in addition to all of our AI services. Today, we see how much excitement there is around ChatGPT, where people are paying for access to that already.”

If you let the subscription lapse, however, the product is effectively a paperweight until you renew it.

Before our session adjourns, I ask Chaudhri how the company landed on the lapel, of all places, especially when head-worn displays have long been seen as the default. His former employer, Apple, is certainly betting on the face with its upcoming Vision Pro.

“Contextual compute has always been assumed as something you have to wear on your face,” he says. “There’s just a lot of issues with that. Many people wear glasses that you put on for a really precise reason. It’s either to help you see or to protect your eyes. That’s a very personal decision — the shape of your frame, the weight of your frame. It all goes into something that’s as unique as you are. If you look at the power of context, and that’s the impediment to achieving contextual compute, there has to be another way. So we started looking at what is the piece that allows us to be far more personal? We came up with the fact that all of us wear clothing, so how can we adorn a device that gives us context on our clothing?”